► How Tesla’s self-driving tech works

► Machine learning is used

► And it’s only going to get better

In scenes looking worryingly similar to Terminator, Tesla has released footage of what its Autopilot driver-assist system actually sees when it’s scanning the road in the course of providing ‘semi-autonomous’ driving.

Tesla recently published an entire landing page dedicated to every aspect of the AI technology, highlighting its achievements in ‘deploying autonomy at scale’.

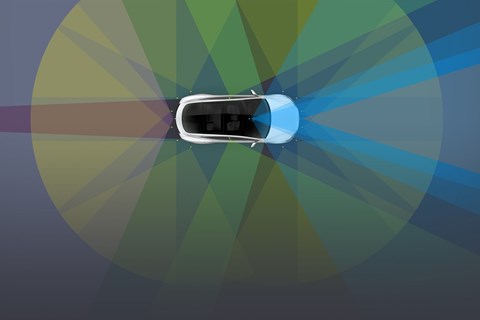

In the video on this page, you can see footage of Autopilot mapping out objects and distances in real time, all being monitored constantly to ensure the car doesn’t go off the rails – so to speak – and crash.

The information page is super-techy, so be warned if you wish to delve further into the details, but we’ll try to break down how Autopilot actually works in practice.

How Tesla’s Autopilot tech works

Essentially, using networked cameras positioned around the car, the software detects and segments objects, estimates how far away they are, and then acts on that information through the car’s controls: steering, brakes and acceleration.
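To make that camera-to-controls chain a little more concrete, here is a minimal Python sketch. It is not Tesla’s code: the `Detection` and `Controls` types, the 30-metre safe gap and the braking rule are all assumptions invented for illustration, and the real system relies on trained neural networks rather than a hand-written rule.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Detection:
    """One object seen by one camera (hypothetical structure)."""
    label: str         # e.g. "car" or "cyclist"
    distance_m: float  # estimated distance to the object, in metres
    camera: str        # which camera saw it


@dataclass
class Controls:
    """Commands sent to the car (hypothetical structure)."""
    steering: float  # -1.0 (full left) to 1.0 (full right)
    brake: float     # 0.0 (off) to 1.0 (full braking)
    throttle: float  # 0.0 (off) to 1.0 (full acceleration)


def merge_detections(per_camera: dict[str, list[Detection]]) -> list[Detection]:
    """Combine every camera's detections into one list, nearest object first."""
    merged = [d for detections in per_camera.values() for d in detections]
    return sorted(merged, key=lambda d: d.distance_m)


def decide_controls(detections: list[Detection], safe_gap_m: float = 30.0) -> Controls:
    """Brake harder the further the nearest object intrudes into the safe gap."""
    if not detections:
        return Controls(steering=0.0, brake=0.0, throttle=0.3)
    nearest = min(d.distance_m for d in detections)
    if nearest >= safe_gap_m:
        return Controls(steering=0.0, brake=0.0, throttle=0.3)
    brake = min(1.0, (safe_gap_m - nearest) / safe_gap_m)
    return Controls(steering=0.0, brake=brake, throttle=0.0)


if __name__ == "__main__":
    seen = merge_detections({
        "front": [Detection("car", 15.0, "front")],
        "left": [Detection("cyclist", 40.0, "left")],
    })
    print(seen[0].label, decide_controls(seen))
```

In this toy version, a car 15 metres ahead sits halfway inside the assumed 30-metre gap, so the sketch asks for half braking and no throttle.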

Using specially-built silicon chips that deal specifically with Autopilot, Tesla is able to build neural networks that can learn about the unique driving situations of nearly one million Teslas throughout the world in real time. This means that while a Tesla can learn about its direct surroundings using the cameras attached to the car, it can also draw on information that’s been gathered by other Teslas around the world to best decide what it should do.

As an aside, Tesla states that a full build of Autopilot’s neural networks involves 48 individual networks that take 70,000 GPU (that’s graphics processing unit) hours to train.
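To put that training figure in perspective, here is some back-of-the-envelope arithmetic in Python. Only the 48 networks and 70,000 GPU hours come from Tesla; the even split between networks and the perfectly efficient GPU cluster are simplifying assumptions, so treat the results as rough guides rather than anything Tesla has published.

```python
GPU_HOURS = 70_000          # total training time quoted by Tesla
NETWORKS = 48               # number of networks quoted by Tesla
HOURS_PER_YEAR = 24 * 365   # ignoring leap years

# How long a single GPU would take to do all the training on its own.
single_gpu_years = GPU_HOURS / HOURS_PER_YEAR

# Average GPU hours per network, ASSUMING the work is split evenly.
avg_hours_per_network = GPU_HOURS / NETWORKS


def wall_clock_days(gpu_count: int) -> float:
    """Days needed on `gpu_count` GPUs, ASSUMING perfect parallel scaling."""
    return GPU_HOURS / gpu_count / 24


if __name__ == "__main__":
    print(f"One GPU alone: about {single_gpu_years:.1f} years")
    print(f"Average per network: about {avg_hours_per_network:.0f} GPU hours")
    print(f"On 1,000 GPUs: about {wall_clock_days(1000):.1f} days")
```

In other words, a single GPU would need roughly eight years to get through the job, which is why this kind of training is spread across large clusters.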

Using the algorithms

But information is useless without being acted upon. This is where Tesla’s autonomous algorithms come in. These complex equations essentially drive the car by dictating what Autopilot should do in a given situation. Put simply, Tesla designs algorithms that tell the car to do X if the neural network detects Y. This is achieved by developing a planning and decision-making system that can operate robustly in real-world situations. Think of it as a fancy, incredibly complex flow chart.
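The ‘do X if Y is detected’ idea can be sketched in a few lines of Python. This is only the flow-chart analogy made literal: the `Scene` fields, the thresholds and the action names are hypothetical, and Tesla’s actual planning system is far more sophisticated than an ordered list of rules.

```python
from typing import NamedTuple


class Scene(NamedTuple):
    """A simplified summary of what the neural networks detected (hypothetical)."""
    obstacle_distance_m: float  # distance to the nearest obstacle ahead
    traffic_light: str          # "red", "green" or "none"
    lane_offset_m: float        # how far the car has drifted from the lane centre


# Each rule is (description, condition Y, action X). Rules are checked in
# order, so the most safety-critical ones come first. Thresholds are made up.
RULES = [
    ("obstacle too close", lambda s: s.obstacle_distance_m < 10.0, "brake"),
    ("red light ahead", lambda s: s.traffic_light == "red", "brake"),
    ("drifting out of lane", lambda s: abs(s.lane_offset_m) > 0.5, "steer_to_centre"),
]


def choose_action(scene: Scene) -> str:
    """Walk the 'flow chart' and return the action of the first matching rule."""
    for _description, condition, action in RULES:
        if condition(scene):
            return action
    return "maintain_speed"  # nothing of concern was detected


if __name__ == "__main__":
    print(choose_action(Scene(5.0, "green", 0.0)))   # obstacle rule fires first
    print(choose_action(Scene(50.0, "none", 0.8)))   # lane-drift rule fires
    print(choose_action(Scene(50.0, "green", 0.0)))  # no rule fires
```

Ordering the rules by priority is one simple way to settle conflicts, such as a green light with an obstacle directly ahead: the braking rule is reached first, so it wins.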

All this technology ensures that as the car is moving along it can autonomously create a high-fidelity representation of the world, and calculate trajectories within that time and space, as you can see in the video at the bottom of the page.

If all this doesn’t sound like mumbo jumbo to you and you find yourself interested in what Tesla has to say, the Californian company is actively looking for help with its AI technology, so go ahead and register your interest on its Autopilot landing page.

For the rest of us, just enjoy the cool footage and quietly await doom as the robots take over.

This article originally appeared on whichcar.com.au