► The technology uses light to map surroundings

► Works along the same principles as radar

► Could be the key to more reliable self-driving cars

LiDAR stands for “Light Detection and Ranging.” It’s a form of remote sensing technology that has the potential to improve the accuracy of self-driving cars. As the name suggests, it works in a similar fashion to radar – but instead of using radio waves to detect nearby objects, it uses a pulsed laser.

We’ll get the worst of the technical info out of the way first. The radio waves used in radar systems have a much longer wavelength than the laser beams used in LiDAR – and that means radar has a much lower resolution. Think of radar like an old black-and-white Polaroid camera and LiDAR as a modern DSLR. You can see much more detail in pictures taken by the latter.
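To put a rough number on that wavelength gap, here is a minimal sketch. The 77GHz radar frequency and 905nm laser wavelength are typical figures for automotive sensors rather than numbers quoted by any manufacturer in this story, and wavelength is only one of several factors that decide how sharp a sensor's picture is.

```python
# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

# Assumed, typical values (not taken from the article):
RADAR_FREQUENCY_HZ = 77e9      # common automotive radar band
LIDAR_WAVELENGTH_M = 905e-9    # common near-infrared LiDAR laser


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength of an electromagnetic wave: speed of light divided by frequency."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


radar_wavelength_m = wavelength_m(RADAR_FREQUENCY_HZ)
wavelength_ratio = radar_wavelength_m / LIDAR_WAVELENGTH_M

print(f"Radar wavelength: {radar_wavelength_m * 1000:.2f} mm")
print(f"Radar waves are about {wavelength_ratio:,.0f} times longer than the LiDAR beam")
```

With those assumed figures, the radar wave comes out at roughly 3.9mm, which is several thousand times longer than the laser light.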

Both systems work in the same way. A transmitter sends signals from the vehicle to its surroundings and, by keeping tabs on how long it takes for the signals to bounce off nearby obstacles and return to the car, the system can build up a 3D map of its surroundings. Both technologies can also calculate the speed of approaching obstacles.
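The sums behind that timing trick are simple enough to sketch. The function names below are made up for illustration, and working out speed from two successive distance readings is a simplification chosen for this example, not a description of how any particular sensor does it.

```python
# Speed of light in a vacuum, in metres per second (air is close enough for a sketch).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to an obstacle, given how long a pulse took to go out and come back."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the obstacle and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def closing_speed(distance_then_m: float, distance_now_m: float, interval_s: float) -> float:
    """Closing speed in m/s from two readings; positive means the obstacle is getting nearer."""
    if interval_s <= 0:
        raise ValueError("interval between readings must be positive")
    return (distance_then_m - distance_now_m) / interval_s


# A pulse that returns after one microsecond has hit something about 150 metres away.
print(f"{distance_from_round_trip(1e-6):.1f} m")
```

Repeat that calculation for every pulse, in every direction the sensor points, and the individual distances add up to the 3D map.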

What are the benefits of LiDAR?

Its accuracy, primarily. Radar works reasonably well with Level 3 autonomous vehicles (like the system Mercedes is about to roll out on the new S-Class and EQS saloons) as they’re mainly used on the motorway, where the smallest obstacle the system needs to worry about is a motorbike.

Radar isn’t 100 percent reliable when it comes to avoiding an accident or debris on the carriageway. But with a Level 3 autonomous system the human driver is acting as the back-up, ready to retake control of the car if the system becomes overwhelmed.

However, more advanced Level 4 and Level 5 autonomous vehicles can operate independently of the driver, which means they need a more accurate method of hazard detection. This level of detail is especially important when the technology is being used to navigate two-tonne vehicles through narrow city streets, avoiding obstacles like bolting dogs, unruly children and wobbly cyclists.

LiDAR technology can also register the size, shape and reflectivity of obstacles – data that software engineers can use to make the car act in a specific manner when it’s near certain shapes. For example, you can programme an autonomous car to move over the white line when the LiDAR sensor picks up a cyclist-sized object on the road.
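As a rough illustration of that kind of rule, here is a toy sketch. Every name and threshold in it is hypothetical: real systems classify objects with far more sophisticated software, and the size limits and 1.5-metre passing gap are assumptions picked for the example.

```python
from dataclasses import dataclass


@dataclass
class DetectedObject:
    """Size of an object picked out of the LiDAR map, in metres (hypothetical structure)."""
    length_m: float
    width_m: float
    height_m: float


def looks_like_cyclist(obj: DetectedObject) -> bool:
    """Crude size check; the limits are illustrative guesses, not calibrated values."""
    return (
        1.2 <= obj.length_m <= 2.2
        and 0.3 <= obj.width_m <= 1.0
        and 1.2 <= obj.height_m <= 2.2
    )


def extra_passing_gap_m(obj: DetectedObject) -> float:
    """How far to move over when passing: 1.5 m for cyclist-sized objects, otherwise nothing."""
    return 1.5 if looks_like_cyclist(obj) else 0.0


cyclist = DetectedObject(length_m=1.8, width_m=0.6, height_m=1.7)
parked_car = DetectedObject(length_m=4.5, width_m=1.8, height_m=1.5)
print(extra_passing_gap_m(cyclist), extra_passing_gap_m(parked_car))
```

The point is only that a sharp enough map lets the software attach different behaviour to different shapes.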

Nissan’s LiDAR technology

Car manufacturers are already developing prototype autonomous cars guided by LiDAR. Nissan recently announced it was working on its next-generation ProPilot driver assistance technology, which the company says will be in widespread use on public roads as soon as 2030.

Nissan’s new system uses an arsenal of sensors, including radar, cameras and LiDAR (which is that strange box mounted on the test car’s roof in the company’s images). The system is backed up by some powerful software which can simulate complicated accidents in real time and steer the car out of harm’s way without any intervention from the driver.

The brand’s marketing department is promoting the technology under the rather spiritually dubious banner of “ground truth perception.” At least Nissan’s motives are sound – the development push forms part of the firm’s ambition to significantly reduce road traffic accidents. Nissan’s explainer video (linked below) shows how the technology works in simple terms.

Takao Asami, Nissan’s senior vice president of research and development, says: “Nissan has been the first to market a number of advanced driver assistance technologies. When we look at the future of autonomous driving, we believe that it is of utmost importance for owners to feel highly confident in the safety of their vehicle.

“We are confident that our in-development ground truth perception technology will make a significant contribution to owner confidence, reduced traffic accidents and autonomous driving in the future.”

Volvo’s LiDAR technology

Volvo’s pure-electric replacement for the XC90 (pictured below) will also feature LiDAR technology, along with a sophisticated artificial intelligence programme that will have similar collision-dodging skills to Nissan’s system. The first test of the system on public roads was scheduled for mid-2022.

Once the system is on the road, Volvo plans to collect real-time data from drivers to make the technology better. The numbers gathered can then be crunched into an over-the-air software update and beamed from the Volvo R&D department straight to the car.

Volvo’s aims for LiDAR technology are typical of the brand. The company’s chief technology officer Henrik Green said: “In our ambition to deliver ever safer cars, our long-term aim is to achieve zero collisions and avoid crashes altogether. As we improve our safety technology continuously through updates over the air, we expect collisions to become increasingly rare and hope to save more lives.”

Argo AI’s LiDAR technology

Argo AI is a start-up technology company, which has partnered with Ford and Volkswagen for their autonomous vehicle projects. The firm’s in-house LiDAR detectors have made waves in the industry for their obstacle-detection capability. The image at the top of this story is a readout of what the sensor sees.

The technology can detect obstacles at a range of 400 metres – and the company says it doesn’t necessarily need the object to be reflective to register its position. The company already has autonomous prototype vehicles on the roads in the United States around Miami and Austin. It is running similar pilot schemes in eight major cities around the globe.

The schemes have been successful so far. Bryan Salesky, Argo AI’s founder and CEO, said: “Argo is first to go driverless in two major American cities, safely operating amongst heavy traffic, pedestrians and bicyclists in the busiest of neighbourhoods.

“From day one, we set out to tackle the hardest miles to drive — in multiple cities — because that’s where the density of customer demand is, and where our autonomy platform is developing the intelligence required to scale it into a sustainable business.”

LiDAR: the drawbacks

LiDAR technology is better than radar, but it isn’t perfect. Its accuracy can be affected by rain, snow and fog, as the water droplets and snowflakes in the air scatter and absorb a lot of the light being emitted from the system. There are also fears that LiDAR sensors may pick up interference from other LiDAR-equipped vehicles once the technology is in widespread use, which could give false readings of hazards.

There are concerns about costs, too. Early LiDAR-equipped prototype cars featured massive sensors on their roofs, which looked a bit like space-age traffic cones. They housed the rotating laser array required to stitch together a 360-degree image of the car’s surroundings. These older systems also cost around £50,000 per car when they were new, which made them cost-prohibitive.

Thankfully, manufacturers have been working on a more compact and cost-effective solution to this latter issue. The LiDAR sensors we have today are around the size of a paperback book and use a tiny electromechanical system to transmit the laser pulse. But progress means pound notes – and just like airbags and cruise control did when they were new, LiDAR will drive up the cost of cars.

Radar: the drawbacks

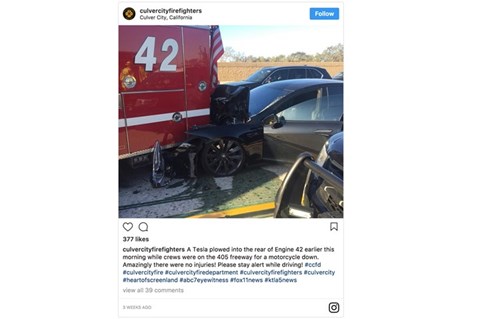

LiDAR only needs to be better than the technology we have today. And the current camera-and-radar based autonomous systems are hardly perfect. Back in 2018, a Tesla Model S rear-ended a fire engine on a dry, bright morning in Los Angeles while using the company’s Autopilot system.

Back then, the Tesla Autopilot system wasn’t clever enough to steer around obstacles, but the car’s radar sensor should have registered something the size of a fire engine as a hazard in the road and applied the brakes to avoid it.

The problem here is that assistance systems such as Tesla’s Autopilot are programmed to disregard stationary vehicles in most situations to prevent the software from hammering on the brakes every time it sees a parked car. The focus is on keeping a safe distance from moving vehicles.
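To see why that trade-off can bite, here is a deliberately simplified sketch of a stationary-object filter. It is not Tesla’s code or any manufacturer’s actual logic. The names, the one-metre-per-second tolerance and the idea of judging each radar return purely on its speed over the ground are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class RadarReturn:
    """One object reported by the radar (hypothetical structure)."""
    label: str
    range_m: float
    relative_speed_mps: float  # negative when the gap to the object is shrinking


def is_stationary(ret: RadarReturn, own_speed_mps: float, tolerance_mps: float = 1.0) -> bool:
    """An object is treated as stationary if its speed over the ground is close to zero."""
    # Speed over the ground = the car's own speed + the object's speed relative to the car.
    ground_speed_mps = own_speed_mps + ret.relative_speed_mps
    return abs(ground_speed_mps) <= tolerance_mps


def vehicles_to_follow(returns: list[RadarReturn], own_speed_mps: float) -> list[RadarReturn]:
    """Keep only moving objects, mirroring the 'ignore parked cars' behaviour described above."""
    return [r for r in returns if not is_stationary(r, own_speed_mps)]


own_speed = 30.0  # roughly 67mph
returns = [
    RadarReturn("slower car ahead", range_m=60.0, relative_speed_mps=-5.0),
    RadarReturn("stopped fire engine", range_m=120.0, relative_speed_mps=-30.0),
]
print([r.label for r in vehicles_to_follow(returns, own_speed)])
```

In this toy version the stopped fire engine is filtered out along with every parked car, which is exactly the blind spot the paragraph above describes.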

As mentioned above, radar also isn’t as sharp as LiDAR. A radar image of a street looks like a mass of boxes – and there’s little indication of whether the object the autonomous car passes is a parked car, a skip or a lamppost. It’s down to software engineers to programme the system so that it ignores the insignificant signals, which introduces lots of room for error.